News

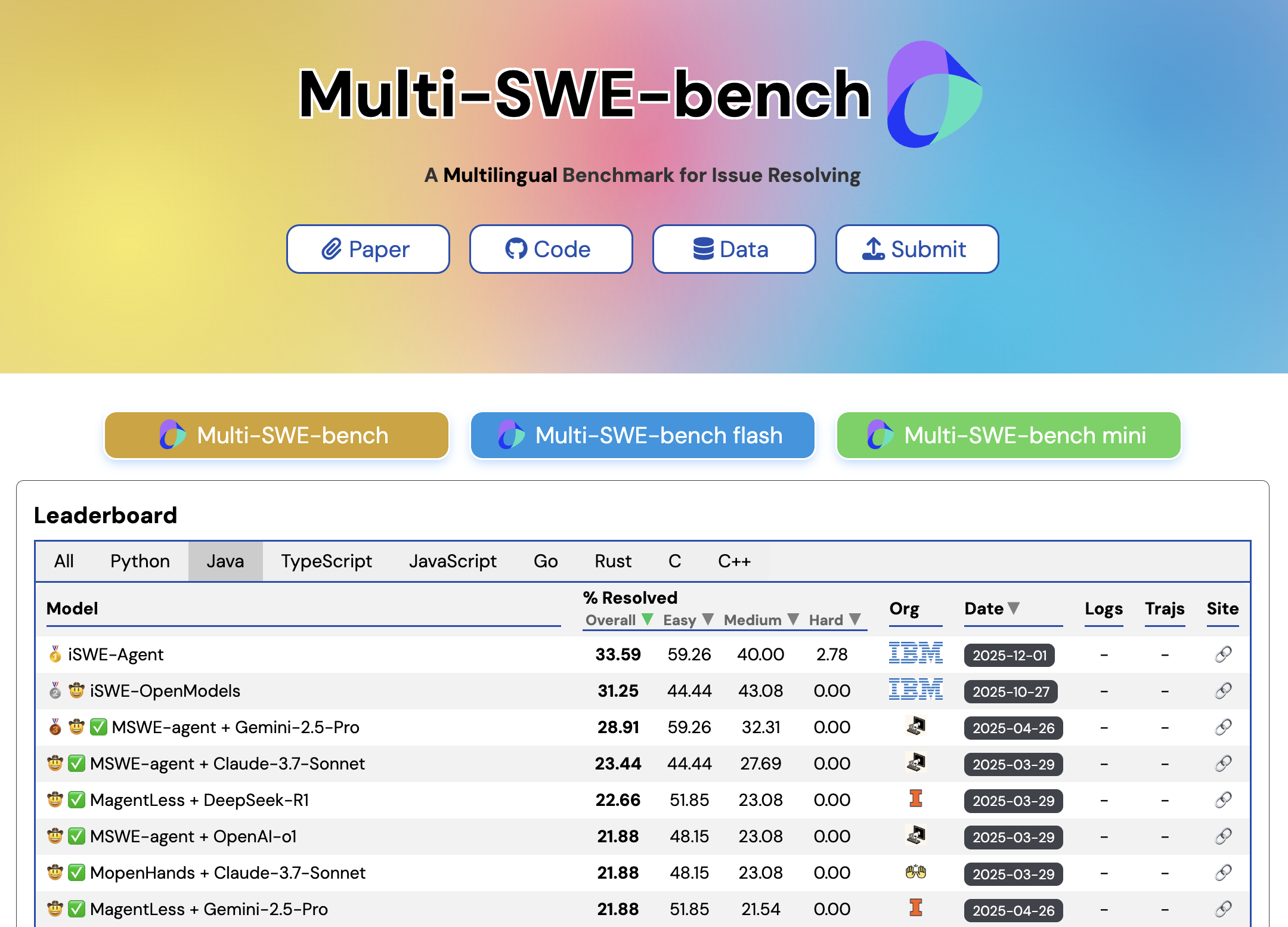

| Date | News |
|---|---|
| Dec 01, 2025 | iSWE-Agent, IBM Research’s software engineering agent, achieved Rank 1 on the Multi-SWE-Bench Java leaderboard with a 33% resolution rate, substantially outperforming the previous best score of 28.9%. |
| Sep 03, 2025 | SWE-Bench-Arena, a platform for blind evaluation of AI-generated code patches, is now live. Unlike benchmarks that only measure test-pass rates, SWE-Bench-Arena evaluates patches across five production-relevant dimensions: correctness, maintainability, readability, performance, and simplicity. |
| Oct 22, 2024 | IBM AI Agent SWE-1.0, previewed at IBM TechXchange, showcases how AI can tackle GitHub issues in minutes using only open-source LLMs. |

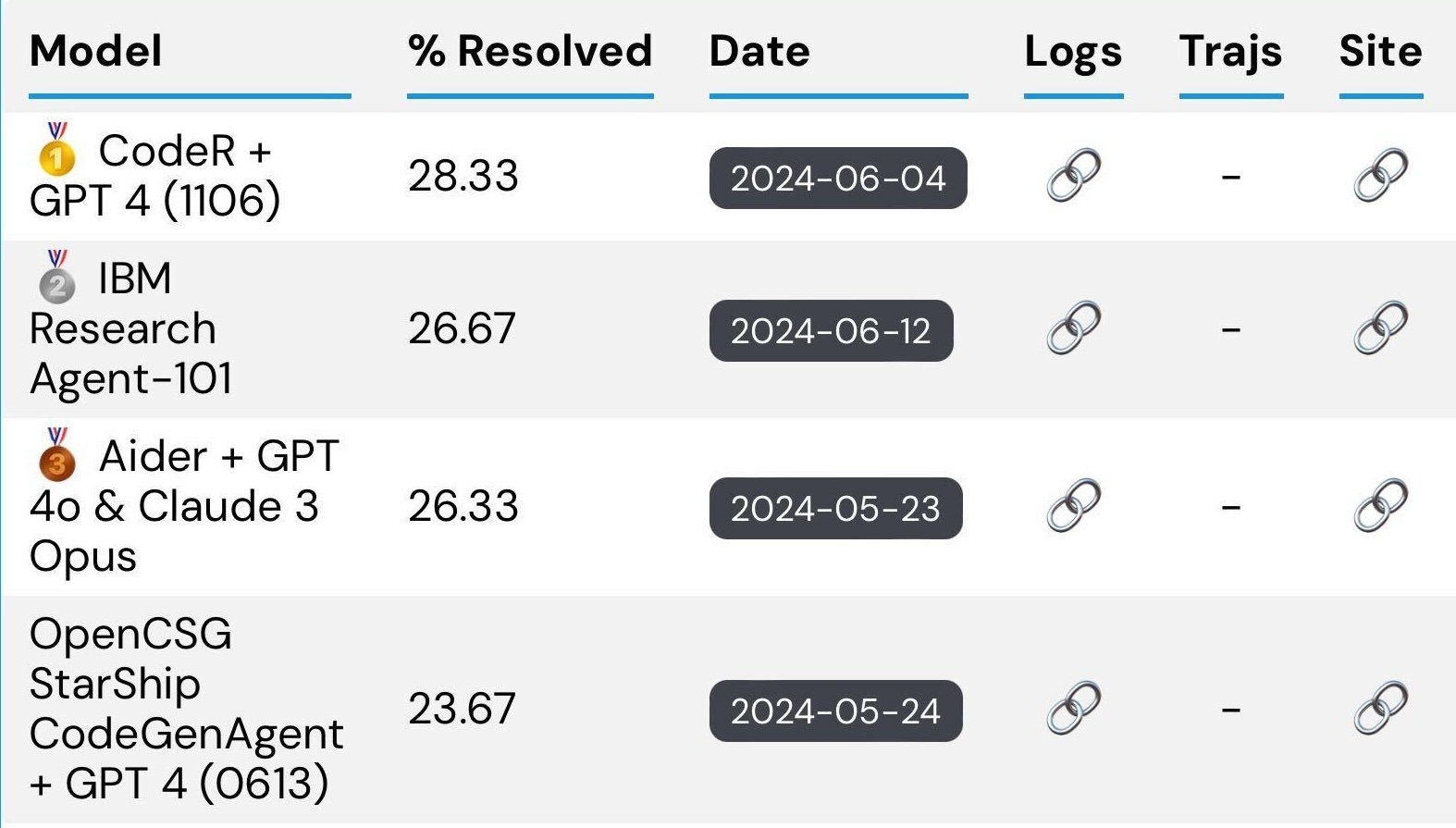

| Date | News |
|---|---|
| Oct 16, 2024 | IBM AI Agent SWE-1.0, using only open-source LLMs, achieved a 23.67% resolution rate on SWE-Bench. Reaching this score without relying on proprietary models is a significant milestone in AI for software engineering. As the Architect and Technical Lead of this solution, I’m excited to see how future SWE-Agents will build upon the foundation we’ve established with open-source LLMs. |
| Jun 12, 2024 | IBM Research’s Agent-101 achieved a 26.67% success rate on the SWE-Bench leaderboard using GPT-4, ranking 2nd at the time of submission. |